Merge branch 'main' into main

docs/16x_prompt/README.md (new file, 18 lines)

# [16x Prompt](https://prompt.16x.engineer/)

AI Coding with Context Management.

16x Prompt helps developers manage source code context and craft prompts for complex coding tasks on existing codebases.

## UI

## Integrate with DeepSeek API

1. Click the model selection button at the bottom right.
2. Click "DeepSeek API" to fill in the API endpoint automatically.
3. Enter the model ID, for example `deepseek-chat` (for DeepSeek V3) or `deepseek-reasoner` (for DeepSeek R1).
4. Enter your API key.
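Assuming 16x Prompt talks to DeepSeek through its OpenAI-compatible API, the three values entered above (endpoint, model ID, API key) correspond roughly to the request sketched below. It is a minimal illustration, not 16x Prompt's code. The helper `build_chat_request` is hypothetical, and the `/chat/completions` path is assumed to be appended to the endpoint the preset fills in. The request is only built here, not sent.

```python
import json
import os
import urllib.request

# Assumed value filled in by the "DeepSeek API" preset.
DEEPSEEK_ENDPOINT = "https://api.deepseek.com"


def build_chat_request(api_key: str, model: str, prompt: str,
                       endpoint: str = DEEPSEEK_ENDPOINT) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    body = {
        "model": model,  # e.g. "deepseek-chat" (V3) or "deepseek-reasoner" (R1)
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=endpoint.rstrip("/") + "/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",  # the API key from step 4
        },
        method="POST",
    )


if __name__ == "__main__":
    req = build_chat_request(os.environ.get("DEEPSEEK_API_KEY", "sk-..."),
                             "deepseek-chat", "Explain this function.")
    print(req.full_url)
    # Sending it would be urllib.request.urlopen(req), then json.load on the reply.
```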

docs/16x_prompt/assets/16x_prompt_integration.png (new binary file, 646 KiB)

docs/16x_prompt/assets/16x_prompt_ui.png (new binary file, 675 KiB)

docs/Geneplore AI/README.md (new file, 17 lines)

# [Geneplore AI](https://geneplore.com/bot)

## Geneplore AI is building the world's easiest way to use AI: use 50+ models, all on Discord

Chat with the all-new DeepSeek V3, GPT-4o, Claude 3 Opus, Llama 3, Gemini Pro, FLUX.1, and ChatGPT with **one bot**. Generate videos with Stable Video Diffusion, and images with the newest and most popular models available.

Don't like how the bot responds? Change the model in *seconds* and keep chatting as usual, without adding another bot to your server. No more fiddling with API keys and webhooks: every model is fully integrated into the bot.

**NEW:** Try DeepSeek V3, the most powerful open AI model, for free with our bot. Type `/chat` and select DeepSeek in the model list.

Use the bot trusted by over 60,000 servers and hundreds of paying subscribers, without the hassle of multiple $20/month subscriptions and complicated programming.


https://geneplore.com


© 2025 Geneplore AI, All Rights Reserved.

docs/Ncurator/README.md (new file, 12 lines)

<img src="./assets/logo.png" width="64" height="auto" />


# [Ncurator](https://www.ncurator.com)

Knowledge base AI Q&A assistant: let AI help you organize and analyze knowledge.

## UI


<img src="./assets/screenshot3.png" width="360" height="auto" />

## Integrate with DeepSeek API

<img src="./assets/screenshot2.png" width="360" height="auto" />

docs/Ncurator/README_cn.md (new file, 11 lines)

<img src="./assets/logo.png" width="64" height="auto" />


# [Ncurator](https://www.ncurator.com)

Knowledge base AI Q&A assistant: let AI help you organize and analyze knowledge.

## UI


<img src="./assets/screenshot1.png" width="360" height="auto" />

## Configure DeepSeek API

<img src="./assets/screenshot2.png" width="360" height="auto" />

docs/Ncurator/assets/logo.png (new binary file, 99 KiB)

docs/Ncurator/assets/screenshot1.png (new binary file, 178 KiB)

docs/Ncurator/assets/screenshot2.png (new binary file, 96 KiB)

docs/Ncurator/assets/screenshot3.png (new binary file, 180 KiB)

```diff
@@ -25,16 +25,14 @@ return {
     lazy = false,
     version = false, -- set this if you want to always pull the latest change
     opts = {
-      provider = "openai",
-      auto_suggestions_provider = "openai", -- Since auto-suggestions are a high-frequency operation and therefore expensive, it is recommended to specify an inexpensive provider or even a free provider: copilot
-      openai = {
-        endpoint = "https://api.deepseek.com/v1",
-        model = "deepseek-chat",
-        timeout = 30000, -- Timeout in milliseconds
-        temperature = 0,
-        max_tokens = 4096,
-        -- optional
-        api_key_name = "OPENAI_API_KEY", -- default OPENAI_API_KEY if not set
+      provider = "deepseek",
+      vendors = {
+        deepseek = {
+          __inherited_from = "openai",
+          api_key_name = "DEEPSEEK_API_KEY",
+          endpoint = "https://api.deepseek.com",
+          model = "deepseek-coder",
+        },
       },
     },
     -- if you want to build from source then do `make BUILD_FROM_SOURCE=true`
```
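The new config drops `timeout`, `temperature`, and `max_tokens` and instead sets `__inherited_from = "openai"` on the `deepseek` vendor. One way to picture this is a shallow merge: the vendor's own fields override the parent provider's defaults, and anything the vendor omits falls back to the parent. The Python sketch below illustrates that idea only, assuming avante.nvim resolves vendors roughly like this. It is not avante.nvim's code. `BUILTIN_PROVIDERS` and `resolve_vendor` are hypothetical, and the parent defaults are placeholders that reuse values from the removed block.

```python
from typing import Any, Dict

# Hypothetical defaults for the parent "openai" provider. The real defaults live
# inside avante.nvim and may differ; these reuse values from the removed block.
BUILTIN_PROVIDERS: Dict[str, Dict[str, Any]] = {
    "openai": {
        "endpoint": "https://api.openai.com/v1",
        "model": "gpt-4o",
        "timeout": 30000,  # milliseconds
        "temperature": 0,
        "max_tokens": 4096,
        "api_key_name": "OPENAI_API_KEY",
    },
}


def resolve_vendor(name: str, vendors: Dict[str, Dict[str, Any]]) -> Dict[str, Any]:
    """Return a vendor's effective settings, filling gaps from its parent."""
    vendor = dict(vendors[name])  # copy so the caller's table is left untouched
    parent_name = vendor.pop("__inherited_from", None)
    if parent_name is None:
        return vendor
    # Vendor fields win; anything the vendor leaves out comes from the parent.
    return {**BUILTIN_PROVIDERS[parent_name], **vendor}


# Mirrors the `vendors` table added in the diff.
vendors = {
    "deepseek": {
        "__inherited_from": "openai",
        "api_key_name": "DEEPSEEK_API_KEY",
        "endpoint": "https://api.deepseek.com",
        "model": "deepseek-coder",
    },
}
```

In this sketch, `resolve_vendor("deepseek", vendors)` keeps the DeepSeek endpoint, model, and key name, and takes `timeout`, `temperature`, and `max_tokens` from the parent.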


```diff
@@ -34,9 +34,8 @@ return {
     require("codecompanion").setup({
       adapters = {
         deepseek = function()
-          return require("codecompanion.adapters").extend("openai_compatible", {
+          return require("codecompanion.adapters").extend("deepseek", {
             env = {
-              url = "https://api.deepseek.com",
               api_key = "YOUR_API_KEY",
             },
           })
@@ -71,9 +70,8 @@ later(function()
     require("codecompanion").setup({
       adapters = {
         deepseek = function()
-          return require("codecompanion.adapters").extend("openai_compatible", {
+          return require("codecompanion.adapters").extend("deepseek", {
             env = {
-              url = "https://api.deepseek.com",
               api_key = "YOUR_API_KEY",
             },
           })
```


```diff
@@ -1,4 +1,4 @@
-<img src="https://github.com/deepseek-ai/awesome-deepseek-integration/assets/59196087/e4d082de-6f64-44b9-beaa-0de55d70cfab" width="64" height="auto" />
+<img src="https://github.com/continuedev/continue/blob/main/docs/static/img/logo.png?raw=true" width="64" height="auto" />
 
 # [Continue](https://continue.dev/)
 
```


docs/curator/README.md (new file, 30 lines)

# [Curator](https://github.com/bespokelabsai/curator)

Curator is an open-source tool for curating large-scale datasets for post-training LLMs.

Curator was used to curate [Bespoke-Stratos-17k](https://huggingface.co/datasets/bespokelabs/Bespoke-Stratos-17k), a reasoning dataset used to train the fully open reasoning model [Bespoke-Stratos](https://www.bespokelabs.ai/blog/bespoke-stratos-the-unreasonable-effectiveness-of-reasoning-distillation).

### Curator supports:

- Calling the DeepSeek API for scalable synthetic data curation
- Easy structured data extraction
- Caching and automatic recovery
- Dataset visualization
- Cost savings with batch mode
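The "caching and automatic recovery" bullet can be illustrated with a minimal Python sketch of the general idea. Each prompt is hashed and its answer is stored on disk. When a job is rerun after an interruption, prompts that already have answers are skipped. This is not Curator's implementation or API. `cached_map` and the `call_model` callback, which would wrap a DeepSeek API call, are hypothetical.

```python
import hashlib
import json
from pathlib import Path
from typing import Callable, Iterable, List


def cached_map(prompts: Iterable[str], call_model: Callable[[str], str],
               cache_dir: Path) -> List[str]:
    """Answer each prompt once; answers persist on disk so a rerun resumes."""
    cache_dir.mkdir(parents=True, exist_ok=True)
    results: List[str] = []
    for prompt in prompts:
        key = hashlib.sha256(prompt.encode("utf-8")).hexdigest()
        path = cache_dir / f"{key}.json"
        if path.exists():
            # Answered by an earlier, possibly interrupted, run: reuse it.
            cached = json.loads(path.read_text(encoding="utf-8"))
            results.append(cached["response"])
            continue
        response = call_model(prompt)  # e.g. a DeepSeek API call
        # Write to a temp file, then rename, so a crash never leaves half a record.
        tmp = path.with_suffix(".tmp")
        tmp.write_text(json.dumps({"prompt": prompt, "response": response}),
                       encoding="utf-8")
        tmp.replace(path)
        results.append(response)
    return results
```

Because answers are keyed by the prompt text, a rerun with extra prompts only pays for the new ones.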

### Call the DeepSeek API easily with Curator:

# Get Started

- [Colab Example](https://colab.research.google.com/drive/1Z78ciwHIl_ytACzcrslNrZP2iwK05eIF?usp=sharing)
- [GitHub Repo](https://github.com/bespokelabsai/curator)
- [Documentation](https://docs.bespokelabs.ai/)
- [Discord](https://discord.com/invite/KqpXvpzVBS)

docs/curator/README_cn.md (new file, 29 lines)

# [Curator](https://github.com/bespokelabsai/curator)

Curator is an open-source tool for building and managing scalable datasets for post-training large language models (LLMs) and for structured data extraction.

Curator was used to create [Bespoke-Stratos-17k](https://huggingface.co/datasets/bespokelabs/Bespoke-Stratos-17k), a reasoning dataset used to train the fully open reasoning model [Bespoke-Stratos](https://www.bespokelabs.ai/blog/bespoke-stratos-the-unreasonable-effectiveness-of-reasoning-distillation).

### Curator supports:

- Calling the DeepSeek API for scalable synthetic data curation
- Easy structured data extraction
- Caching and automatic recovery
- Dataset visualization
- Cost savings with batch mode

### Call the DeepSeek API easily with Curator:

# Get Started

- [Colab Example](https://colab.research.google.com/drive/1Z78ciwHIl_ytACzcrslNrZP2iwK05eIF?usp=sharing)
- [GitHub Repo](https://github.com/bespokelabsai/curator)
- [Documentation](https://docs.bespokelabs.ai/)
- [Discord](https://discord.com/invite/KqpXvpzVBS)

docs/stranslate/README.md (new file, 31 lines)

<img src="./assets/stranslate.svg" width="64" height="auto" />


# [`STranslate`](https://stranslate.zggsong.com/)

STranslate is a use-and-go translation and OCR tool.

## Translation

Supports many languages and several ways to translate: typed input, text selection, screenshots, clipboard monitoring, and mouse text selection. Results from multiple services can be shown side by side for easy comparison.

## OCR

Supports fully offline OCR for Chinese, English, Japanese, and Korean, based on PaddleOCR, with excellent performance and quick response. It supports screenshot, clipboard, and file OCR, as well as silent OCR. Additionally, it supports OCR services from WeChat, Baidu, Tencent, OpenAI, and Google.

## Services

Supports integration with over ten translation services including DeepSeek, OpenAI, Gemini, ChatGLM, Baidu, Microsoft, Tencent, Youdao, and Alibaba. It also offers free API options. Built-in services like Microsoft, Yandex, Google, and Kingsoft PowerWord are ready to use out of the box.

## Features

Supports back-translation, global TTS, writing (directly translating and replacing selected content), custom prompts, QR code recognition, external calls, and more.
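Back-translation means translating the result back into the source language to check whether the meaning survived the round trip. Below is a minimal Python sketch of the idea. It is not STranslate's implementation. `back_translate` and the `translate` callback are hypothetical. The normalized exact-match check is a deliberately crude stand-in for a person comparing both versions.

```python
from typing import Callable, Tuple

# translate(text, source_lang, target_lang) -> translated text. A hypothetical
# stand-in for whichever configured service (DeepSeek, Microsoft, ...) is used.
Translate = Callable[[str, str, str], str]


def _normalize(text: str) -> str:
    """Lower-case and collapse whitespace for a loose comparison."""
    return " ".join(text.lower().split())


def back_translate(text: str, src: str, dst: str,
                   translate: Translate) -> Tuple[str, str, bool]:
    """Translate src -> dst, then dst -> src, and report whether it round-trips."""
    forward = translate(text, src, dst)
    back = translate(forward, dst, src)
    return forward, back, _normalize(back) == _normalize(text)
```

A real round trip rarely matches word for word, so the boolean is best read as a hint. The main value is showing `back` next to the original.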

## Main Interface

## Configuration

docs/stranslate/README_cn.md (new file, 31 lines)

<img src="./assets/stranslate.svg" width="64" height="auto" />


# [`STranslate`](https://stranslate.zggsong.com/)

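STranslate is a use-and-go translation and OCR tool.

## Translation

Supports multiple languages and several translation methods, including typed input, text selection, screenshots, clipboard monitoring, and mouse text selection. Results from multiple services can be displayed at the same time for easy comparison.

## OCR

Supports fully offline OCR for Chinese, English, Japanese, and Korean based on PaddleOCR, with excellent results and fast response. Supports screenshot, clipboard, and file OCR, as well as silent OCR. OCR services from WeChat, Baidu, Tencent, OpenAI, Google, and others are also supported.

## Services

Supports more than ten translation services, including DeepSeek, OpenAI, Gemini, ChatGLM, Baidu, Microsoft, Tencent, Youdao, and Alibaba; free APIs are also available. Built-in services such as Microsoft, Yandex, Google, and Kingsoft PowerWord work out of the box.

## Features

Supports back-translation, global TTS, writing (translate the selected text and replace it in place), custom prompts, QR code recognition, external calls, and more.

## Main Interface

## Configuration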

docs/stranslate/assets/main.png (new binary file, 133 KiB)

docs/stranslate/assets/settings_1.png (new binary file, 204 KiB)

docs/stranslate/assets/settings_2.png (new binary file, 50 KiB)

docs/stranslate/assets/stranslate.svg (new file, 2 lines, 614 B)

<?xml version="1.0" encoding="UTF-8"?>


<svg data-name="Layer 1" id="Layer_1" viewBox="0 0 32 32" xmlns="http://www.w3.org/2000/svg"><defs><style>.cls-1{fill:#ba63c6;}</style></defs><title/><path class="cls-1" d="M31.79,28.11l-7-14a2,2,0,0,0-3.58,0L18,20.56,15.3,18.73A17.13,17.13,0,0,0,19.91,9H22a2,2,0,0,0,0-4H14V3a2,2,0,0,0-4,0V5H2A2,2,0,0,0,2,9H15.86a13.09,13.09,0,0,1-3.79,7.28,13.09,13.09,0,0,1-3.19-4.95,2,2,0,1,0-3.77,1.34A17.1,17.1,0,0,0,8.9,18.75L3.84,22.37a2,2,0,0,0,2.33,3.25l5.93-4.24,4.08,2.79-2,3.93a2,2,0,0,0,3.58,1.79l.45-.89H23a2,2,0,0,0,0-4H20.24L23,19.47l5.21,10.42a2,2,0,0,0,3.58-1.79Z"/></svg>

docs/superagentx/README.md (new file, 33 lines)

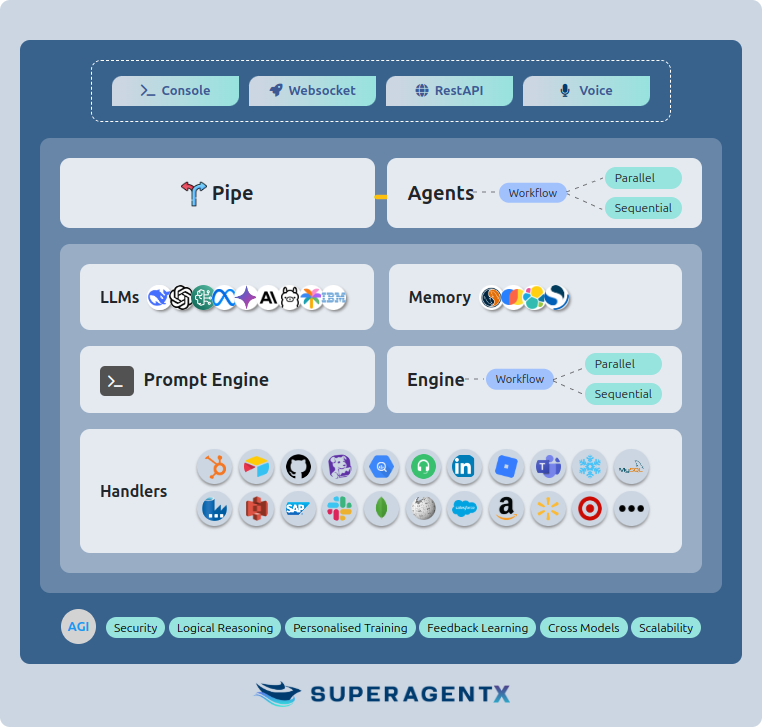

# `SuperAgentX`


> 🤖 SuperAgentX: A lightweight autonomous true multi-agent framework with AGI capabilities.


**SuperAgentX Source Code**: [https://github.com/superagentxai/superagentx](https://github.com/superagentxai/superagentx)


**DeepSeek AI Agent Example**: [https://github.com/superagentxai/superagentx/blob/master/tests/llm/test_deepseek_client.py](https://github.com/superagentxai/superagentx/blob/master/tests/llm/test_deepseek_client.py)

**Documentation**: [https://docs.superagentx.ai/](https://docs.superagentx.ai/)

The SuperAgentX framework integrates DeepSeek as its LLM service provider, strengthening the reasoning and decision-making of its multi-agent systems.


## 🤖 Introduction

`SuperAgentX` is an advanced agentic AI framework designed to accelerate the development of Artificial General Intelligence (AGI). It provides a powerful, modular, and flexible platform for building autonomous AI agents capable of executing complex tasks with minimal human intervention.

### ✨ Key Features


🚀 Open-Source Framework: A lightweight, open-source AI framework built for multi-agent applications with Artificial General Intelligence (AGI) capabilities.

🎯 Goal-Oriented Multi-Agents: Create agents with retry mechanisms that keep working until they reach a set goal. Agents communicate in parallel, sequentially, or in a hybrid of both.

🏖️ Easy Deployment: Offers WebSocket, RESTful API, and IO console interfaces for rapid setup of agent-based AI solutions.

♨️ Streamlined Architecture: Enterprise-ready, scalable, and pluggable architecture. No major dependencies; built independently!

📚 Contextual Memory: Uses SQL and vector databases to store and retrieve user-specific context effectively.

🧠 Flexible LLM Configuration: Supports simple configuration of various generative AI models.

🤝🏻 Extendable Handlers: Allows integration with diverse APIs, databases, data warehouses, data lakes, IoT streams, and more, making them accessible to function calling.
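The parallel, sequential, and hybrid communication modes and the retry-until-goal behavior described above can be illustrated with plain `asyncio`. This is a generic Python sketch, not SuperAgentX's API. Here an "agent" is just a hypothetical async function from text to text; in a real setup each one would wrap an LLM call, for example to DeepSeek. `run_with_retry`, `run_sequential`, and `run_parallel` are illustrative names.

```python
import asyncio
from typing import Awaitable, Callable, List

# Hypothetical agent shape: an async function from an input string to an output
# string. Not SuperAgentX's Agent class.
Agent = Callable[[str], Awaitable[str]]


async def run_with_retry(agent: Agent, task: str, goal: Callable[[str], bool],
                         max_retries: int = 3) -> str:
    """Call the agent until its output satisfies the goal or retries run out."""
    last = ""
    for _ in range(max_retries):
        last = await agent(task)
        if goal(last):
            return last
    raise RuntimeError(f"goal not met after {max_retries} attempts: {last!r}")


async def run_sequential(agents: List[Agent], task: str) -> str:
    """Each agent receives the previous agent's output."""
    for agent in agents:
        task = await agent(task)
    return task


async def run_parallel(agents: List[Agent], task: str) -> List[str]:
    """All agents receive the same input and run concurrently."""
    return list(await asyncio.gather(*(agent(task) for agent in agents)))
```

A hybrid pipeline composes the two. For example, a sequential chain can include one step that is itself a `run_parallel` over several reviewer agents.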

docs/superagentx/assets/architecture.png (new binary file, 99 KiB)