Mirror of https://github.com/deepseek-ai/awesome-deepseek-integration.git (synced 2025-10-13 20:11:30 -04:00)

Merge branch 'main' into main

Commit f33ffc1f18: 20 changed files with 632 additions and 50 deletions

docs/Geneplore AI/README.md (Normal file, 17 lines changed)

@@ -0,0 +1,17 @@
+# [Geneplore AI](https://geneplore.com/bot)
+
+## Geneplore AI is building the world's easiest way to use AI - Use 50+ models, all on Discord
+
+Chat with the all-new Deepseek v3, GPT-4o, Claude 3 Opus, LLaMA 3, Gemini Pro, FLUX.1, and ChatGPT with **one bot**. Generate videos with Stable Diffusion Video, and images with the newest and most popular models available.
+
+Don't like how the bot responds? Simply change the model in *seconds* and continue chatting like normal, without adding another bot to your server. No more fiddling with API keys and webhooks - every model is completely integrated into the bot.
+
+**NEW:** Try the most powerful open AI model, Deepseek v3, for free with our bot. Simply type /chat and select Deepseek in the model list.
+
+
+
+Use the bot trusted by over 60,000 servers and hundreds of paying subscribers, without the hassle of multiple $20/month subscriptions and complicated programming.
+
+https://geneplore.com
+
+© 2025 Geneplore AI, All Rights Reserved.

docs/Ncurator/README.md (Normal file, 12 lines changed)

@@ -0,0 +1,12 @@
+<img src="./assets/logo.png" width="64" height="auto" />
+
+# [Ncurator](https://www.ncurator.com)
+
+Knowledge Base AI Q&A Assistant -
+Let AI help you organize and analyze knowledge
+
+## UI
+<img src="./assets/screenshot3.png" width="360" height="auto" />
+
+## Integrate with Deepseek API
+<img src="./assets/screenshot2.png" width="360" height="auto" />

docs/Ncurator/README_cn.md (Normal file, 11 lines changed)

@@ -0,0 +1,11 @@
+<img src="./assets/logo.png" width="64" height="auto" />
+
+# [Ncurator](https://www.ncurator.com)
+
+Knowledge Base AI Q&A Assistant - Let AI help you organize and analyze knowledge
+
+## UI
+<img src="./assets/screenshot1.png" width="360" height="auto" />
+
+## Configure the Deepseek API
+<img src="./assets/screenshot2.png" width="360" height="auto" />

docs/Ncurator/assets/logo.png (Normal file, binary)

Binary file not shown. Size after the change: 99 KiB.

docs/Ncurator/assets/screenshot1.png (Normal file, binary)

Binary file not shown. Size after the change: 178 KiB.

docs/Ncurator/assets/screenshot2.png (Normal file, binary)

Binary file not shown. Size after the change: 96 KiB.

docs/Ncurator/assets/screenshot3.png (Normal file, binary)

Binary file not shown. Size after the change: 180 KiB.

@@ -25,16 +25,14 @@ return {
   lazy = false,
   version = false, -- set this if you want to always pull the latest change
   opts = {
-    provider = "openai",
-    auto_suggestions_provider = "openai", -- Since auto-suggestions are a high-frequency operation and therefore expensive, it is recommended to specify an inexpensive provider or even a free provider: copilot
-    openai = {
-      endpoint = "https://api.deepseek.com/v1",
-      model = "deepseek-chat",
-      timeout = 30000, -- Timeout in milliseconds
-      temperature = 0,
-      max_tokens = 4096,
-      -- optional
-      api_key_name = "OPENAI_API_KEY", -- default OPENAI_API_KEY if not set
+    provider = "deepseek",
+    vendors = {
+      deepseek = {
+        __inherited_from = "openai",
+        api_key_name = "DEEPSEEK_API_KEY",
+        endpoint = "https://api.deepseek.com",
+        model = "deepseek-coder",
+      },
     },
   },
   -- if you want to build from source then do `make BUILD_FROM_SOURCE=true`
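The hunk above moves the DeepSeek settings out of the generic `openai` block into a `deepseek` vendor entry that inherits from `openai` and reads its key from `DEEPSEEK_API_KEY`. As a rough illustration of what an OpenAI-compatible client might do with those three settings, here is a minimal Python sketch. It is not the plugin's implementation: the `VENDOR` dictionary layout and the `build_chat_request` helper are assumptions made for this example, and the `/chat/completions` path is the usual OpenAI-style route rather than something stated in the diff.

```python
import json
import urllib.request

# Mirrors the values of the `deepseek` vendor entry in the diff.
# The dictionary layout itself is an assumption for illustration.
VENDOR = {
    "api_key_name": "DEEPSEEK_API_KEY",
    "endpoint": "https://api.deepseek.com",
    "model": "deepseek-coder",
}


def build_chat_request(vendor, env, prompt):
    """Build (but do not send) an OpenAI-style chat completion request."""
    api_key = env.get(vendor["api_key_name"])
    if not api_key:
        raise KeyError(f"environment variable {vendor['api_key_name']} is not set")
    body = {
        "model": vendor["model"],
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=vendor["endpoint"].rstrip("/") + "/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Build a request with a placeholder key; nothing is sent over the network.
request = build_chat_request(VENDOR, {"DEEPSEEK_API_KEY": "sk-test"}, "Explain this function")
```

Passing `os.environ` as `env` and handing the result to `urllib.request.urlopen` would send the request; that step needs a real key and is left out here.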

@@ -25,16 +25,14 @@ return {
   lazy = false,
   version = false, -- set this if you want to always pull the latest change
   opts = {
-    provider = "openai",
-    auto_suggestions_provider = "openai", -- Since auto-suggestions are a high-frequency operation and therefore expensive, it is recommended to specify an inexpensive provider or even a free provider: copilot
-    openai = {
-      endpoint = "https://api.deepseek.com/v1",
-      model = "deepseek-chat",
-      timeout = 30000, -- Timeout in milliseconds
-      temperature = 0,
-      max_tokens = 4096,
-      -- optional
-      api_key_name = "OPENAI_API_KEY", -- default OPENAI_API_KEY if not set
+    provider = "deepseek",
+    vendors = {
+      deepseek = {
+        __inherited_from = "openai",
+        api_key_name = "DEEPSEEK_API_KEY",
+        endpoint = "https://api.deepseek.com",
+        model = "deepseek-coder",
+      },
     },
   },
   -- if you want to build from source then do `make BUILD_FROM_SOURCE=true`

@@ -34,9 +34,8 @@ return {
     require("codecompanion").setup({
       adapters = {
         deepseek = function()
-          return require("codecompanion.adapters").extend("openai_compatible", {
+          return require("codecompanion.adapters").extend("deepseek", {
             env = {
-              url = "https://api.deepseek.com",
               api_key = "YOUR_API_KEY",
             },
           })
@@ -71,9 +70,8 @@ later(function()
     require("codecompanion").setup({
       adapters = {
         deepseek = function()
-          return require("codecompanion.adapters").extend("openai_compatible", {
+          return require("codecompanion.adapters").extend("deepseek", {
             env = {
-              url = "https://api.deepseek.com",
               api_key = "YOUR_API_KEY",
             },
           })
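Both hunks above swap the generic `openai_compatible` base for a dedicated `deepseek` adapter, which is why the override no longer has to carry a `url`. The general idea of "extend a named adapter with a few overrides" can be sketched as a recursive dictionary merge. The Python below is only an illustration of that idea: `BUILTIN_ADAPTERS`, its default values, and this `extend` function are hypothetical and are not taken from the plugin.

```python
import copy

# Hypothetical built-in defaults; a real adapter would define more fields.
BUILTIN_ADAPTERS = {
    "deepseek": {
        "url": "https://api.deepseek.com/chat/completions",
        "env": {"api_key": "DEEPSEEK_API_KEY"},
    },
}


def extend(name, overrides):
    """Return a copy of a built-in adapter with overrides merged in recursively."""

    def merge(base, extra):
        for key, value in extra.items():
            if isinstance(value, dict) and isinstance(base.get(key), dict):
                merge(base[key], value)  # descend into nested tables
            else:
                base[key] = value  # scalar or new key: override wins
        return base

    # Deep-copy so the shared defaults are never mutated.
    return merge(copy.deepcopy(BUILTIN_ADAPTERS[name]), overrides)


# Only the key is overridden; the URL comes from the adapter's defaults.
adapter = extend("deepseek", {"env": {"api_key": "YOUR_API_KEY"}})
```

Copying the defaults before merging keeps one user's overrides from leaking into later calls to `extend`.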

@@ -34,9 +34,8 @@ return {
     require("codecompanion").setup({
       adapters = {
         deepseek = function()
-          return require("codecompanion.adapters").extend("openai_compatible", {
+          return require("codecompanion.adapters").extend("deepseek", {
             env = {
-              url = "https://api.deepseek.com",
               api_key = "YOUR_API_KEY",
             },
           })
@@ -71,9 +70,8 @@ later(function()
     require("codecompanion").setup({
       adapters = {
         deepseek = function()
-          return require("codecompanion.adapters").extend("openai_compatible", {
+          return require("codecompanion.adapters").extend("deepseek", {
             env = {
-              url = "https://api.deepseek.com",
               api_key = "YOUR_API_KEY",
             },
           })

@@ -1,4 +1,4 @@
-<img src="https://github.com/deepseek-ai/awesome-deepseek-integration/assets/59196087/e4d082de-6f64-44b9-beaa-0de55d70cfab" width="64" height="auto" />
+<img src="https://github.com/continuedev/continue/blob/main/docs/static/img/logo.png?raw=true" width="64" height="auto" />
 
 # [Continue](https://continue.dev/)
 

@@ -1,4 +1,4 @@
-<img src="https://github.com/deepseek-ai/awesome-deepseek-integration/assets/59196087/e4d082de-6f64-44b9-beaa-0de55d70cfab" width="64" height="auto" />
+<img src="https://github.com/continuedev/continue/blob/main/docs/static/img/logo.png?raw=true" width="64" height="auto" />
 
 # [Continue](https://continue.dev/)
 

docs/curator/README.md (Normal file, 30 lines changed)

@@ -0,0 +1,30 @@
+
+
+
+
+# [Curator](https://github.com/bespokelabsai/curator)
+
+
+Curator is an open-source tool to curate large scale datasets for post-training LLMs.
+
+Curator was used to curate [Bespoke-Stratos-17k](https://huggingface.co/datasets/bespokelabs/Bespoke-Stratos-17k), a reasoning dataset to train a fully open reasoning model [Bespoke-Stratos](https://www.bespokelabs.ai/blog/bespoke-stratos-the-unreasonable-effectiveness-of-reasoning-distillation).
+
+
+### Curator supports:
+
+- Calling Deepseek API for scalable synthetic data curation
+- Easy structured data extraction
+- Caching and automatic recovery
+- Dataset visualization
+- Saving $$$ using batch mode
+
+### Call Deepseek API with Curator easily:
+
+
+
+# Get Started here
+
+- [Colab Example](https://colab.research.google.com/drive/1Z78ciwHIl_ytACzcrslNrZP2iwK05eIF?usp=sharing)
+- [Github Repo](https://github.com/bespokelabsai/curator)
+- [Documentation](https://docs.bespokelabs.ai/)
+- [Discord](https://discord.com/invite/KqpXvpzVBS)
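The README above lists "Caching and automatic recovery" among Curator's features without showing how such a feature behaves. The Python sketch below illustrates the general technique only: responses are appended to a JSON-lines file as they arrive, so a rerun (or a run resumed after a crash) skips prompts that were already answered. It does not use Curator's API; `cached_generate`, `fake_model`, and the cache file layout are all assumptions made for this example.

```python
import hashlib
import json
import os
import tempfile


def cached_generate(prompts, call_model, cache_path):
    """Run call_model on each prompt, reusing responses stored in a JSON-lines cache."""
    cache = {}
    if os.path.exists(cache_path):
        with open(cache_path, encoding="utf-8") as f:
            for line in f:
                record = json.loads(line)
                cache[record["key"]] = record["response"]

    results = []
    with open(cache_path, "a", encoding="utf-8") as f:
        for prompt in prompts:
            key = hashlib.sha256(prompt.encode("utf-8")).hexdigest()
            if key not in cache:
                cache[key] = call_model(prompt)
                # Append immediately so an interrupted run can resume from here.
                f.write(json.dumps({"key": key, "response": cache[key]}) + "\n")
                f.flush()
            results.append(cache[key])
    return results


calls = []


def fake_model(prompt):
    """Stand-in for a real model call; records each invocation."""
    calls.append(prompt)
    return prompt.upper()


cache_file = os.path.join(tempfile.mkdtemp(), "cache.jsonl")
first = cached_generate(["a", "b"], fake_model, cache_file)
second = cached_generate(["a", "b", "c"], fake_model, cache_file)
```

On the second call only `"c"` reaches `fake_model`; the other two responses come from the cache file.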

docs/curator/README_cn.md (Normal file, 29 lines changed)

@@ -0,0 +1,29 @@
+
+
+
+# [Curator](https://github.com/bespokelabsai/curator)
+
+
+Curator is an open-source tool for creating and curating scalable datasets for post-training large language models (LLMs) and for structured data extraction.
+
+Curator was used to create [Bespoke-Stratos-17k](https://huggingface.co/datasets/bespokelabs/Bespoke-Stratos-17k), a reasoning dataset used to train the fully open-source reasoning model [Bespoke-Stratos](https://www.bespokelabs.ai/blog/bespoke-stratos-the-unreasonable-effectiveness-of-reasoning-distillation).
+
+
+### Curator supports:
+
+- Calling the Deepseek API for scalable synthetic data curation
+- Easy structured data extraction
+- Caching and automatic recovery
+- Dataset visualization
+- Saving costs with batch mode
+
+### Call the Deepseek API easily with Curator:
+
+
+
+# Get started here
+
+- [Colab example](https://colab.research.google.com/drive/1Z78ciwHIl_ytACzcrslNrZP2iwK05eIF?usp=sharing)
+- [GitHub repo](https://github.com/bespokelabsai/curator)
+- [Documentation](https://docs.bespokelabs.ai/)
+- [Discord](https://discord.com/invite/KqpXvpzVBS)

docs/superagentx/README.md (Normal file, 33 lines changed)

|

|

@ -0,0 +1,33 @@

|

|||

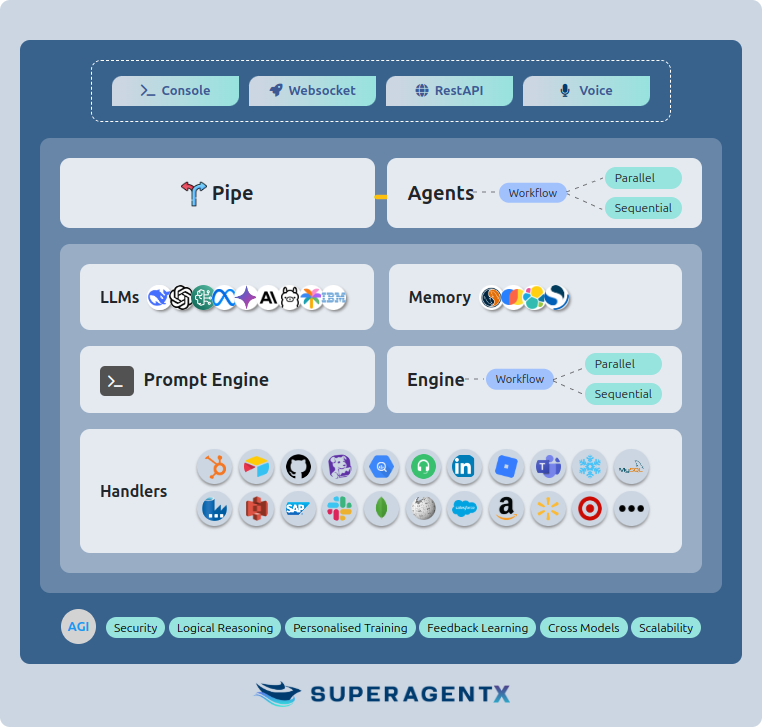

# `SuperAgentX`

|

||||

|

||||

> 🤖 SuperAgentX: A lightweight autonomous true multi-agent framework with AGI capabilities.

|

||||

|

||||

**SuperAgentX Source Code**: [https://github.com/superagentxai/superagentx](https://github.com/superagentxai/superagentx)

|

||||

|

||||

**DeepSeek AI Agent Example**: [https://github.com/superagentxai/superagentx/blob/master/tests/llm/test_deepseek_client.py](https://github.com/superagentxai/superagentx/blob/master/tests/llm/test_deepseek_client.py)

|

||||

|

||||

**Documentation** : [https://docs.superagentx.ai/](https://docs.superagentx.ai/)

|

||||

|

||||

The SuperAgentX framework integrates DeepSeek as its LLM service provider, enhancing the multi-agent's reasoning and decision-making capabilities.

|

||||

|

||||

## 🤖 Introduction

|

||||

|

||||

`SuperAgentX` SuperAgentX is an advanced agentic AI framework designed to accelerate the development of Artificial General Intelligence (AGI). It provides a powerful, modular, and flexible platform for building autonomous AI agents capable of executing complex tasks with minimal human intervention.

|

||||

|

||||

|

||||

|

||||

### ✨ Key Features

|

||||

|

||||

🚀 Open-Source Framework: A lightweight, open-source AI framework built for multi-agent applications with Artificial General Intelligence (AGI) capabilities.

|

||||

|

||||

🎯 Goal-Oriented Multi-Agents: This technology enables the creation of agents with retry mechanisms to achieve set goals. Communication between agents is Parallel, Sequential, or hybrid.

|

||||

|

||||

🏖️ Easy Deployment: Offers WebSocket, RESTful API, and IO console interfaces for rapid setup of agent-based AI solutions.

|

||||

|

||||

♨️ Streamlined Architecture: Enterprise-ready scalable and pluggable architecture. No major dependencies; built independently!

|

||||

|

||||

📚 Contextual Memory: Uses SQL + Vector databases to store and retrieve user-specific context effectively.

|

||||

|

||||

🧠 Flexible LLM Configuration: Supports simple configuration options of various Gen AI models.

|

||||

|

||||

🤝🏻 Extendable Handlers: Allows integration with diverse APIs, databases, data warehouses, data lakes, IoT streams, and more, making them accessible for function-calling features.

|

||||
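The feature list above mentions agents that retry until a goal is met and that communicate sequentially or in parallel. The Python sketch below shows one way those three patterns can be expressed with `asyncio`. It does not use the SuperAgentX API: an "agent" here is just an async function, and `run_with_retry`, `run_sequential`, `run_parallel`, and the toy agents are hypothetical names chosen for this illustration.

```python
import asyncio


async def run_with_retry(agent, task, goal_met, max_retries=3):
    """Call an agent until goal_met(result) is true or the retries run out."""
    for _ in range(max_retries):
        result = await agent(task)
        if goal_met(result):
            return result
    raise RuntimeError(f"goal not met after {max_retries} attempts")


async def run_sequential(agents, task):
    """Feed each agent's output into the next one."""
    for agent in agents:
        task = await agent(task)
    return task


async def run_parallel(agents, task):
    """Give every agent the same task and collect the results in order."""
    return await asyncio.gather(*(agent(task) for agent in agents))


# Toy agents standing in for LLM-backed ones.
async def shout(text):
    return text.upper()


async def exclaim(text):
    return text + "!"


attempts = {"n": 0}


async def flaky(text):
    """Returns an empty answer on the first call, then succeeds."""
    attempts["n"] += 1
    return text if attempts["n"] >= 2 else ""


async def main():
    seq = await run_sequential([shout, exclaim], "hi")
    par = await run_parallel([shout, exclaim], "hi")
    retried = await run_with_retry(flaky, "ok", goal_met=bool)
    return seq, par, retried


seq, par, retried = asyncio.run(main())
```

A hybrid flow follows from composition: because `run_parallel` returns a list, a sequential stage can take that list as its input and pass a merged result on to the next agent.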

docs/superagentx/assets/architecture.png (Normal file, binary)

Binary file not shown. Size after the change: 99 KiB.
